It’s been a while since I last posted here, so I thought I’d post an update. I’m sure people are interested in the new core, but unfortunately real life (i.e., desperately trying to finish my PhD) has got in the way, so I have had limited time over the last several months.

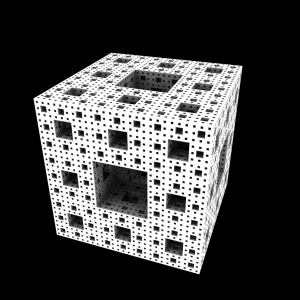

While progress has been slow in that area, there’s been a recent need for more advanced illumination techniques, and since this is a much smaller project I have taken it on in the meantime. The aim is to implement point-cloud-based global illumination, inspired primarily by the Pixar paper “Point-Based Approximate Color Bleeding” (Christensen, 2008) but also by “Micro-Rendering for Scalable, Parallel Final Gathering” by Ritschel et al.

I’d like to emphasize that while these techniques are somewhat approximate due to the hierarchical point cloud representation, they rely on rendering a micro environment buffer of the scene at each shading point, and so correctly take occlusion information into account. This is in contrast to earlier, more approximate point-based algorithms, which produce similar results in some circumstances. On the downside, microbuffer-based algorithms are inevitably slower.

I’ve already made some progress in the “pointrender” branch of the aqsis git repo, and I’m keen to show some test pictures. These show pure ambient occlusion lighting, but colour bleeding is a very simple extension.

The geometry below was generated by an updated version of the example fractal generation procedural. The procedural was previously designed to generate Menger sponge fractals, but I’ve updated it to allow arbitrary subdivision motifs and to avoid the redundant faces which used to be created. First, the original:

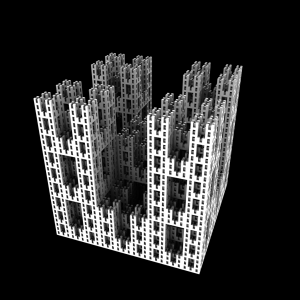
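
To make the idea of a subdivision motif concrete, here is a minimal Python sketch. It is not the code of the aqsis procedural; the function names (`menger_motif`, `leaf_cells`, `visible_faces`) and the choice to represent a motif as a set of kept cells in a 3×3×3 grid are assumptions made purely for illustration. It shows how swapping the motif changes the fractal, and how redundant faces can be avoided by only emitting faces with no neighbouring cell.

```python
from itertools import product

# Hypothetical representation: a motif is the set of (i, j, k) sub-cells of
# an n x n x n grid that survive each subdivision step.
def menger_motif():
    # Classic Menger sponge: drop every sub-cell that has two or more
    # coordinates in the middle slot (6 face centres + 1 body centre).
    return {c for c in product(range(3), repeat=3)
            if sum(i == 1 for i in c) < 2}

def leaf_cells(motif, depth, n=3):
    """Apply the motif `depth` times; return leaf cells as integer coords."""
    cells = {(0, 0, 0)}
    for _ in range(depth):
        cells = {(x * n + i, y * n + j, z * n + k)
                 for (x, y, z) in cells
                 for (i, j, k) in motif}
    return cells

FACE_NORMALS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
                (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def visible_faces(cells):
    """Keep only faces whose neighbouring cell is empty, so that faces
    shared by two adjacent cubes are never generated."""
    return [(cell, nrm)
            for cell in cells
            for nrm in FACE_NORMALS
            if (cell[0] + nrm[0], cell[1] + nrm[1], cell[2] + nrm[2])
            not in cells]

if __name__ == "__main__":
    cells = leaf_cells(menger_motif(), depth=1)
    print(len(cells), len(visible_faces(cells)))
```

With the Menger motif at depth 1 this keeps 20 cells and emits 72 of their 120 faces. Any other set of kept cells can be passed as the motif without changing the rest of the code.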

A kind of turrets motif:

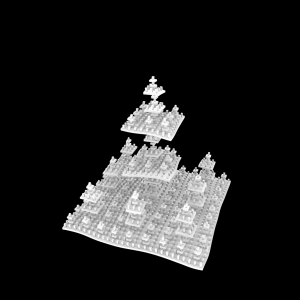

and, for good measure, some displacement. Displacement does slow things down in some of these cases, but only to the extent that the displacement bounds cause more geometry to be generated and shaded.
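
For readers unfamiliar with the microbuffer idea described earlier, the following is a heavily simplified Python sketch of a single occlusion query. It is an illustration under stated assumptions, not the code in the “pointrender” branch: it loops over every point instead of traversing a hierarchical point cloud, it treats each point as a sphere of the given radius rather than an oriented disk, and it uses a cosine-weighted hemisphere of sample directions in place of a proper raster buffer. All function names are hypothetical.

```python
import math

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(a):
    length = math.sqrt(_dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)

def microbuffer_directions(normal, res=8):
    """res*res directions over the hemisphere about `normal`, distributed so
    that every "pixel" carries the same cosine-weighted solid angle."""
    n = _normalize(normal)
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = _normalize(_cross(helper, n))
    b = _cross(n, t)
    dirs = []
    for i in range(res):
        for j in range(res):
            u, v = (i + 0.5) / res, (j + 0.5) / res
            r, phi = math.sqrt(u), 2.0 * math.pi * v
            x, y, z = r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u)
            dirs.append(tuple(x * t[k] + y * b[k] + z * n[k] for k in range(3)))
    return dirs

def ambient_occlusion(position, normal, points, res=8):
    """Fraction of microbuffer pixels covered by any point in `points`,
    where each point is a (centre, radius) pair."""
    n = _normalize(normal)
    dirs = microbuffer_directions(n, res)
    covered = [False] * len(dirs)
    for centre, radius in points:
        to_pt = (centre[0] - position[0],
                 centre[1] - position[1],
                 centre[2] - position[2])
        dist = math.sqrt(_dot(to_pt, to_pt))
        if dist == 0.0:
            continue
        d = (to_pt[0] / dist, to_pt[1] / dist, to_pt[2] / dist)
        if _dot(d, n) <= 0.0:
            continue  # centre is below the horizon; ignored in this sketch
        sin_a = min(1.0, radius / dist)          # angular radius of the splat
        cos_a = math.sqrt(1.0 - sin_a * sin_a)
        for idx, w in enumerate(dirs):
            if _dot(w, d) >= cos_a:
                covered[idx] = True
    return sum(covered) / len(covered)
```

Because the pixels are cosine-weighted, the occlusion is simply the covered fraction. Pure occlusion only needs a coverage flag per pixel; storing the colour of the nearest point per pixel instead is what would turn this into colour bleeding.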

As for performance, I’m not particularly happy with it at this stage. I can’t remember the exact timings, but the above images took perhaps half an hour or so each to generate at 1024×1024 on a single core, without any “cheating” to speed things up. That is, no occlusion interpolation or anything like that, so one occlusion query per shading point. With a bit of judicious cheating I think we can get this down significantly, hopefully to a few minutes per frame at a shading rate of 1.

That’s all for now, see you next time!